Troubleshooting zpool online not working

Yesterday the first 10TB recertified drive tested all okay. I’ve decided to upgrade the four 3TB drives in my pool to 10TB to expand capacity.

I have a 16-bay hot-swap chassis with 13 slots filled.

- 4x 3TB (raidz1, tank)

- 4x 8TB (raidz1, tank)

- 4x 14TB (raidz1, tank)

- A single 3TB drive as ‘scratch’ disk for temporary backup data

I really want to have the same vdev drives stacked in the same column physically. I’m like that. So my first order of business was to move one of the 3TB drives to a free slot to make room for the 10TB disks.

So I performed a zpool offline tank <id> on the disk, took it out and stuck it in another drive bay. dmesg clearly showed me the new disk was ready and available:

sd 0:0:18:0: [sdi] Attached SCSI disk

Since it’s the same drive, with the same GUID, I expected ZFS to recognise this disk, online it and then resilver it as necessary. I’ve seen this work before with one of the 14TB drives when I had a bad SATA cable. But instead, sadness:

tank DEGRADED

raidz1-0 DEGRADED

wwn-0x50014ee2bb55b28f OFFLINE

wwn-0x50014ee265ff666a ONLINE

wwn-0x50014ee210aaa174 ONLINE

wwn-0x50014ee265ff7131 ONLINE

So, let’s online the disk manually, and get the vdev back in working order!

$ zpool online tank wwn-0x50014ee2bb55b28f

(no output)

That didn’t work.

$ zpool online tank /dev/disk/by-id/wwn-0x50014ee2bb55b28f

(no output)

$ zpool online tank sdi

(no output)

$ zpool online tank /dev/sdi

(no output)

$ zpool online tank <GUID>

(no output)

No dice.

The obvious solution would be to wipe the 3TB disk and replace it with itself, doing a full 3TB resilver on it. But that would be too easy. Also, onlining a disk should work, so why doesn’t it?

At this point, I had exhausted my Google-fu. I even asked ChatGPT, but it didn’t come up with anything I hadn’t tried yet.

Next step: IRC

I hopped on #zfs on Libera Chat and dropped my question. A few people were online and started giving the obvious answers. Together we decided that it’s not working. With one of the people (thanks, Rich) in #zfs I dove into partition tables, labels, cache files, etc. Turns out Rich does quite some work on ZFS and he was of great help investigating the issue.

Then, I thought to check the exit code for the zpool online command.

$ zpool online tank wwn-0x50014ee2bb55b28f

(no output)

$ echo $?

1

Aah! So, zpool online exits with an error. But why?
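A command that fails silently is easy to miss, so it can help to always print the exit status. Below is a minimal sketch of that idea; `report_status` is a hypothetical helper name of my own, not something ZFS ships.

```shell
# Run any command and always report its exit status on stderr,
# even when the command itself prints nothing.
# `report_status` is a hypothetical helper name, not part of ZFS.
report_status() {
    "$@"
    rc=$?
    echo "exit status: $rc" >&2
    return "$rc"
}

# Example (needs a real pool):
#   report_status zpool online tank wwn-0x50014ee2bb55b28f
```

Because the helper returns the wrapped command's status, it can still be used in `if` or `&&` chains.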

Well, it was helpful in the sense that zpool online now says it cannot online the drive, but the exact reason was still unknown. I then tried onlining the partition instead of the whole disk. Let me explain.

Device Start End Sectors Size Type

/dev/sdb1 128 4194431 4194304 2G FreeBSD swap

/dev/sdb2 4194432 5860533127 5856338696 2.7T FreeBSD ZFS

So this is how FreeNAS (based on FreeBSD) partitioned the disk when it was added to the original pool. So now, instead of onlining /dev/sdb, I was going to try to online just the ZFS partition /dev/sdb2. Note that /dev/disk/by-id/wwn-0x50014ee2bb55b28f-part2 symlinks to /dev/sdb2.

$ zpool online tank sdb2

cannot online sdb2: cannot relabel 'sdb2': unable to read disk capacity

In some way, this error makes sense, since I’m trying to online a partition and not a disk. Still, I threw the message into Google, which led me to an open issue on OpenZFS that says drives cannot be online’d when autoexpand=on for the pool.

Well, that would be easy to test:

$ zpool set autoexpand=off tank

$ zpool online tank <guid>

$ zpool set autoexpand=on tank

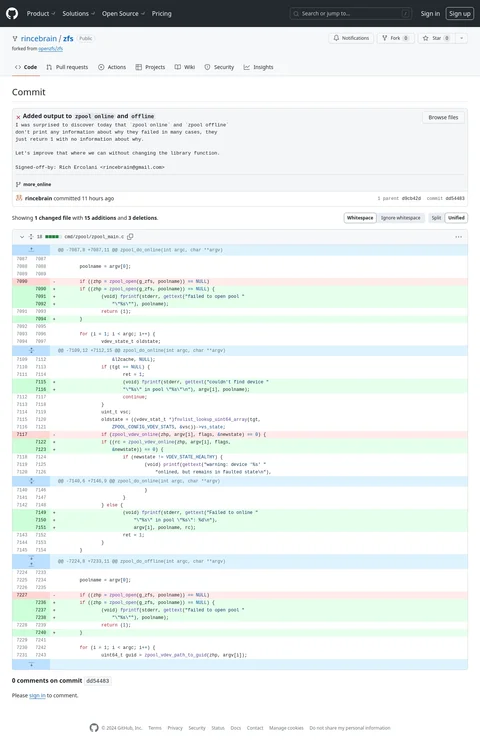
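The three commands above can be wrapped so that the pool's original autoexpand value is restored even if the online step fails. This is only a sketch: `online_without_autoexpand` is a hypothetical function name, and it assumes `zpool get -H -o value` is available to read the current setting.

```shell
# Hypothetical helper: online a device with autoexpand temporarily
# disabled, then restore whatever value the pool had before.
online_without_autoexpand() {
    pool=$1
    device=$2

    # -H drops the header line, -o value prints only the property value.
    previous=$(zpool get -H -o value autoexpand "$pool") || return 1

    zpool set autoexpand=off "$pool" || return 1
    zpool online "$pool" "$device"
    rc=$?

    # Put the original setting back, whether or not the online succeeded.
    zpool set autoexpand="$previous" "$pool"
    return "$rc"
}

# Example (needs a real pool):
#   online_without_autoexpand tank wwn-0x50014ee2bb55b28f
```

Reading the property first means a pool that already had autoexpand=off is not silently switched to on afterwards.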

It took the better part of 15 seconds for the drive to sync up and resilver. Done! I think @behlendorf explained the root cause of this problem better than I can:

The core issue here is that since the pool was created on FreeBSD the partition layout differs slightly from what’s expected on Linux. This difference prevents ZFS from being able to auto-expand the device since it can’t safely re-partition it to use the additional space. Unfortunately, that results in the device not being onlined at all.

There is nothing I can do to remedy this problem. The only way to “fix” it is to offline a disk with FreeBSD partitioning, wipe it and then replace it with itself so a new Linux compatible partition table is created.

For now, I’m going to replace the 3TB drives anyway, and the new 10TB ones will get a Linux-compatible partition table. That leaves my 8TB drives still in limbo, but since I now know the root cause of the problem, I can easily work around it when necessary.
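To see which disks still carry the FreeBSD layout, one option is to filter a partition listing for the "FreeBSD ZFS" type shown earlier. This is a rough sketch: `freebsd_zfs_partitions` is a hypothetical helper, and it assumes the type is the last column of each line, as in the listing above.

```shell
# Hypothetical helper: read a partition listing (such as `fdisk -l`
# output) on stdin and print every partition whose type column reads
# "FreeBSD ZFS".
freebsd_zfs_partitions() {
    awk '/^\/dev\// && /FreeBSD ZFS[ \t]*$/ { print $1 }'
}

# Example (needs root):
#   fdisk -l 2>/dev/null | freebsd_zfs_partitions
```

Any disk that shows up here would need the autoexpand workaround, or a wipe and replace, before it onlines cleanly.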

Big shout out to Rich for assisting me with this issue! I probably wouldn’t have been able to figure it out without you.